Quickstart

Wolf runs as a single container; it spins up and tears down additional containers on demand.

Docker

Intel/AMD

Docker CLI:

docker run \
    --name wolf \
    --network=host \
    -e XDG_RUNTIME_DIR=/tmp/sockets \
    -v /tmp/sockets:/tmp/sockets:rw \
    -e HOST_APPS_STATE_FOLDER=/etc/wolf \
    -v /etc/wolf:/etc/wolf:rw \
    -v /var/run/docker.sock:/var/run/docker.sock:rw \
    --device /dev/dri/ \
    --device /dev/uinput \
    -v /dev/shm:/dev/shm:rw \
    -v /dev/input:/dev/input:rw \
    -v /run/udev:/run/udev:rw \
    --device-cgroup-rule "c 13:* rmw" \
    ghcr.io/games-on-whales/wolf:stable

Docker compose:

version: "3.8"
services:
  wolf:
    image: ghcr.io/games-on-whales/wolf:stable
    environment:
      - XDG_RUNTIME_DIR=/tmp/sockets
      - HOST_APPS_STATE_FOLDER=/etc/wolf
    volumes:
      - /etc/wolf/:/etc/wolf
      - /tmp/sockets:/tmp/sockets:rw
      - /var/run/docker.sock:/var/run/docker.sock:rw
      - /dev/shm:/dev/shm:rw
      - /dev/input:/dev/input:rw
      - /run/udev:/run/udev:rw
    device_cgroup_rules:
      - 'c 13:* rmw'
    devices:
      - /dev/dri
      - /dev/uinput
    network_mode: host
    restart: unless-stopped

Nvidia

Unfortunately, on Nvidia, things are a little more complex:

- Your driver version must be >= 530.30.02
- The --gpus flag and the Nvidia Docker Toolkit do not seem to work (to be investigated)
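The driver requirement above can be checked before going any further. The following is a minimal sketch, not part of Wolf: the helper name version_ge is made up for illustration, and it assumes a `sort` that supports `-V` (version sort, as in GNU coreutils) and that the loaded driver reports its version in /sys/module/nvidia/version.

```shell
#!/bin/sh
# Minimum driver version taken from the requirement above.
MIN_NVIDIA_DRIVER="530.30.02"

# version_ge A B (hypothetical helper): succeeds when version A >= version B.
# Sorts both versions and checks that B comes first (or is equal).
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Only run the check when the nvidia kernel module is loaded.
if [ -r /sys/module/nvidia/version ]; then
    installed="$(cat /sys/module/nvidia/version)"
    if version_ge "$installed" "$MIN_NVIDIA_DRIVER"; then
        echo "Driver $installed is new enough"
    else
        echo "Driver $installed is older than $MIN_NVIDIA_DRIVER" >&2
    fi
fi
```

If the file is missing, the script prints nothing; that usually means the nvidia module is not loaded yet.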

First, let’s build an additional docker image that will contain the Nvidia driver files:

curl https://raw.githubusercontent.com/games-on-whales/gow/master/images/nvidia-driver/Dockerfile | docker build -t gow/nvidia-driver:latest -f - --build-arg NV_VERSION=$(cat /sys/module/nvidia/version) .

This will create gow/nvidia-driver:latest locally.

Unfortunately, Docker doesn’t seem to support mounting images directly, but you can pre-populate a volume by running:

docker create --rm --mount source=nvidia-driver-vol,destination=/usr/nvidia gow/nvidia-driver:latest sh

This will create a Docker container, populate nvidia-driver-vol with the Nvidia driver files if that wasn’t already done, and remove the container.

Check that the volume exists with:

docker volume ls | grep nvidia-driver
local     nvidia-driver-vol

One final check: we have to make sure that the nvidia-drm module has been loaded, and that it was loaded with the flag modeset=1.

sudo cat /sys/module/nvidia_drm/parameters/modeset
Y

I get N or the file is not present, how do I set the flag?

If you use GRUB, the easiest way to make the change persistent is to add nvidia-drm.modeset=1 to the GRUB_CMDLINE_LINUX_DEFAULT line in /etc/default/grub, for example:

GRUB_CMDLINE_LINUX_DEFAULT="quiet nvidia-drm.modeset=1"

Then run sudo update-grub and reboot.

For more options or details, you can see ArchWiki: Kernel parameters
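The modeset check described above can be wrapped in a small script that tells the three cases apart (Y, N, or no file). This is only a sketch: the function name modeset_status is invented for illustration, and it assumes the parameter file is readable by the user running it (the command above uses sudo, so you may need to run this as root too).

```shell
#!/bin/sh
# modeset_status FILE (hypothetical helper): prints "enabled", "disabled"
# or "missing" depending on the content of the modeset parameter file.
modeset_status() {
    if [ ! -r "$1" ]; then
        echo "missing"
    elif [ "$(cat "$1")" = "Y" ]; then
        echo "enabled"
    else
        echo "disabled"
    fi
}

case "$(modeset_status /sys/module/nvidia_drm/parameters/modeset)" in
    enabled)  echo "nvidia-drm modeset is on" ;;
    disabled) echo "nvidia-drm is loaded without modeset=1" ;;
    missing)  echo "nvidia-drm is not loaded (or the file is not readable)" ;;
esac
```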

You can now finally start the container; Docker CLI:

docker run \
    --name wolf \
    --network=host \
    -e XDG_RUNTIME_DIR=/tmp/sockets \
    -v /tmp/sockets:/tmp/sockets:rw \
    -e NVIDIA_DRIVER_VOLUME_NAME=nvidia-driver-vol \
    -v nvidia-driver-vol:/usr/nvidia:rw \
    -e HOST_APPS_STATE_FOLDER=/etc/wolf \
    -v /etc/wolf:/etc/wolf:rw \
    -v /var/run/docker.sock:/var/run/docker.sock:rw \
    --device /dev/nvidia-uvm \
    --device /dev/nvidia-uvm-tools \
    --device /dev/dri/ \
    --device /dev/nvidia-caps/nvidia-cap1 \
    --device /dev/nvidia-caps/nvidia-cap2 \
    --device /dev/nvidiactl \
    --device /dev/nvidia0 \
    --device /dev/nvidia-modeset \
    --device /dev/uinput \
    -v /dev/shm:/dev/shm:rw \
    -v /dev/input:/dev/input:rw \
    -v /run/udev:/run/udev:rw \
    --device-cgroup-rule "c 13:* rmw" \
    ghcr.io/games-on-whales/wolf:stable

Docker compose:

version: "3.8"
services:
  wolf:
    image: ghcr.io/games-on-whales/wolf:stable
    environment:
      - XDG_RUNTIME_DIR=/tmp/sockets
      - NVIDIA_DRIVER_VOLUME_NAME=nvidia-driver-vol
      - HOST_APPS_STATE_FOLDER=/etc/wolf
    volumes:
      - /etc/wolf/:/etc/wolf:rw
      - /tmp/sockets:/tmp/sockets:rw
      - /var/run/docker.sock:/var/run/docker.sock:rw
      - /dev/shm:/dev/shm:rw
      - /dev/input:/dev/input:rw
      - /run/udev:/run/udev:rw
      - nvidia-driver-vol:/usr/nvidia:rw
    devices:
      - /dev/dri
      - /dev/uinput
      - /dev/nvidia-uvm
      - /dev/nvidia-uvm-tools
      - /dev/nvidia-caps/nvidia-cap1
      - /dev/nvidia-caps/nvidia-cap2
      - /dev/nvidiactl
      - /dev/nvidia0
      - /dev/nvidia-modeset
    device_cgroup_rules:
      - 'c 13:* rmw'
    network_mode: host
    restart: unless-stopped

volumes:
  nvidia-driver-vol:
    external: true

If you are missing any of the /dev/nvidia* devices, you might also need to initialise them using:

sudo nvidia-container-cli --load-kmods info

Or, if that fails:

#!/bin/bash
## Script to initialize nvidia device nodes.
## https://docs.nvidia.com/cuda/cuda-installation-guide-linux/index.html#runfile-verifications

/sbin/modprobe nvidia
if [ "$?" -eq 0 ]; then
  # Count the number of NVIDIA controllers found.
  NVDEVS=`lspci | grep -i NVIDIA`
  N3D=`echo "$NVDEVS" | grep "3D controller" | wc -l`
  NVGA=`echo "$NVDEVS" | grep "VGA compatible controller" | wc -l`
  N=`expr $N3D + $NVGA - 1`
  # Create one /dev/nvidiaN node per controller (major number 195).
  for i in `seq 0 $N`; do
    mknod -m 666 /dev/nvidia$i c 195 $i
  done
  mknod -m 666 /dev/nvidiactl c 195 255
else
  exit 1
fi

/sbin/modprobe nvidia-uvm
if [ "$?" -eq 0 ]; then
  # Find out the major device number used by the nvidia-uvm driver
  D=`grep nvidia-uvm /proc/devices | awk '{print $1}'`
  mknod -m 666 /dev/nvidia-uvm c $D 0
  # nvidia-uvm-tools uses minor number 1 (minor 0 is nvidia-uvm itself)
  mknod -m 666 /dev/nvidia-uvm-tools c $D 1
else
  exit 1
fi

Which ports are used by Wolf?

To keep things simple, the scripts above default to host networking (--network=host, or network_mode: host in compose). That’s not strictly required; the minimum set of ports that need to be exposed is:

# HTTPS
EXPOSE 47984/tcp
# HTTP
EXPOSE 47989/tcp
# Control
EXPOSE 47999/udp
# RTSP
EXPOSE 48010/tcp
# Video (up to 10 users)
EXPOSE 48100-48110/udp
# Audio (up to 10 users)
EXPOSE 48200-48210/udp

Moonlight pairing

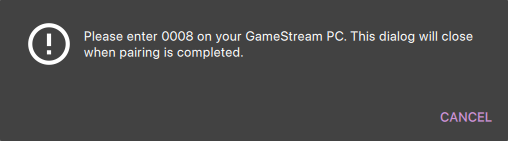

You should now be able to point Moonlight to the IP address of the server and start the pairing process:

-

In Moonlight, you’ll get a prompt for a PIN

-

Wolf will log a line with a link to a page where you can input that PIN (ex: http://localhost:47989/pin/#337327E8A6FC0C66 make sure to replace

localhostwith your server IP)

-

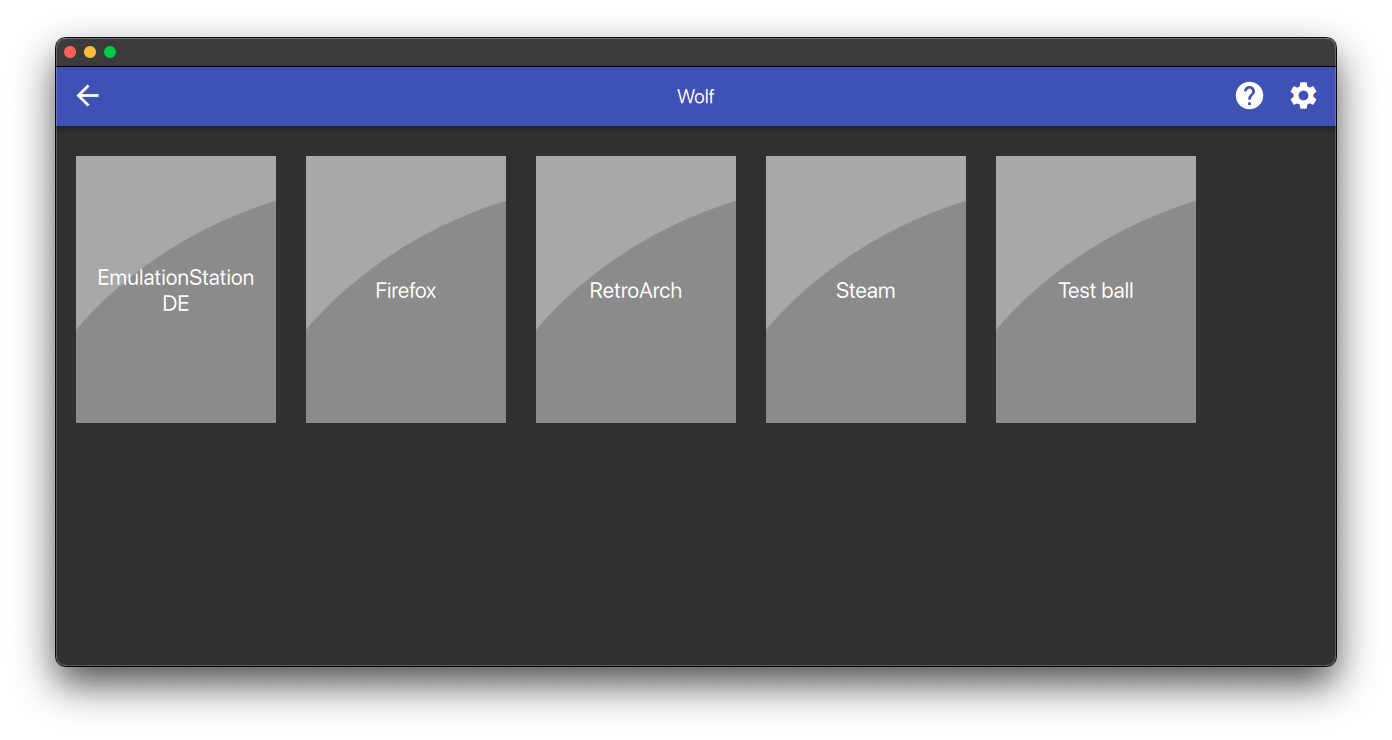

In Moonlight, you should now be able to see a list of the applications that are supported by Wolf
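The localhost substitution from the pairing steps above can be done with a one-line helper. This is an illustrative sketch only: pin_url is a made-up function name, and 192.168.1.20 is a placeholder address that stands in for your server’s IP.

```shell
#!/bin/sh
# pin_url LOGGED_URL SERVER_IP (hypothetical helper): replaces the
# "localhost" host in the URL logged by Wolf with SERVER_IP.
pin_url() {
    printf '%s\n' "$1" | sed "s|//localhost|//$2|"
}

# 192.168.1.20 is a placeholder; use the IP address of your server.
pin_url "http://localhost:47989/pin/#337327E8A6FC0C66" "192.168.1.20"
```

Open the printed URL in a browser on any machine that can reach the server, then enter the PIN shown by Moonlight.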

Note: if you can only see a black screen with a cursor in Moonlight, it’s because the first time you start an app, Wolf has to download the corresponding Docker image and run first-time updates.

Virtual devices support

We use uinput to create virtual devices (mouse, keyboard and joypad). Make sure that /dev/uinput is present on the host:

ls -la /dev/uinput

crw------- 1 root root 10, 223 Jan 17 09:08 /dev/uinput

Add your user to the input group:

sudo usermod -a -G input $USER

Create the following udev rules under /etc/udev/rules.d/85-wolf-virtual-inputs.rules:

# Allows Wolf to access /dev/uinput
KERNEL=="uinput", SUBSYSTEM=="misc", MODE="0660", GROUP="input", OPTIONS+="static_node=uinput"

# Move virtual keyboard and mouse into a different seat
SUBSYSTEMS=="input", ATTRS{id/vendor}=="ab00", MODE="0660", GROUP="input", ENV{ID_SEAT}="seat9"

# Joypads
SUBSYSTEMS=="input", ATTRS{name}=="Wolf X-Box One (virtual) pad", MODE="0660", GROUP="input"
SUBSYSTEMS=="input", ATTRS{name}=="Wolf PS5 (virtual) pad", MODE="0660", GROUP="input"
SUBSYSTEMS=="input", ATTRS{name}=="Wolf gamepad (virtual) motion sensors", MODE="0660", GROUP="input"
SUBSYSTEMS=="input", ATTRS{name}=="Wolf Nintendo (virtual) pad", MODE="0660", GROUP="input"

What does that mean?

KERNEL=="uinput", SUBSYSTEM=="misc", MODE="0660", GROUP="input", OPTIONS+="static_node=uinput"

Allows Wolf to access /dev/uinput on your system.

It needs that node to create the virtual devices.

This is usually not the default on servers, but if that is already working for you on your desktop system, you can skip this line.

SUBSYSTEMS=="input", ATTRS{id/vendor}=="ab00", MODE="0660", GROUP="input", ENV{ID_SEAT}="seat9"

This line checks for the custom vendor ID that Wolf gives to newly created virtual devices and assigns them to seat9, so that sessions on any other seat (usually you only have seat0 for your main session) ignore these devices.

SUBSYSTEMS=="input", ATTRS{name}=="Wolf X-Box One (virtual) pad", MODE="0660", GROUP="input"

SUBSYSTEMS=="input", ATTRS{name}=="Wolf PS5 (virtual) pad", MODE="0660", GROUP="input"

SUBSYSTEMS=="input", ATTRS{name}=="Wolf gamepad (virtual) motion sensors", MODE="0660", GROUP="input"

SUBSYSTEMS=="input", ATTRS{name}=="Wolf Nintendo (virtual) pad", MODE="0660", GROUP="input"

The virtual controllers are handled differently: they have to emulate an existing brand to be picked up correctly, so their vendor/product IDs resemble those of a real controller. The assigned name, on the other hand, is specific to Wolf, which is why these rules match on it.

You can’t usefully assign controllers to a seat (you can, but it won’t have the same effect), so we just set permissions so that only the owning user and group can access them.

Reload the udev rules either by rebooting or by running:

udevadm control --reload-rules && udevadm trigger
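After reloading, it can be useful to confirm that the permissions from the rules above actually took effect. The script below is a rough sketch under a few assumptions: the helper names group_can_rw and in_list are invented here, `stat -c` is the GNU coreutils form, and the check runs on the host as the user that should have access.

```shell
#!/bin/sh
# group_can_rw MODE (hypothetical helper): succeeds when an octal mode
# such as 660 grants both read and write to the group.
group_can_rw() {
    [ $(( 0$1 & 060 )) -eq $(( 060 )) ]
}

# in_list WORD ITEMS... (hypothetical helper): succeeds when WORD is
# one of the remaining arguments.
in_list() {
    word="$1"
    shift
    for item in "$@"; do
        [ "$item" = "$word" ] && return 0
    done
    return 1
}

if [ -e /dev/uinput ]; then
    mode="$(stat -c '%a' /dev/uinput)"          # e.g. 660
    owner_group="$(stat -c '%G' /dev/uinput)"   # e.g. input
    if ! group_can_rw "$mode" || [ "$owner_group" != "input" ]; then
        echo "/dev/uinput is $mode/$owner_group, expected 660/input" >&2
    fi
    # A newly added group only shows up after logging in again.
    in_list input $(id -nG) || echo "current user is not in the input group" >&2
fi
```

If both checks stay silent, the uinput rule and the group membership are in place.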